I am writing this blog post while preparing for the Facebook Data Engineering interview. The onsite round consists of various scenario based questions which largely deal with user activity. You are asked to write a SQL to calculate a metric related to the scenario and then a python script to perform similar calculations. There is also a Data Modeling interview which involves designing a Dimensional Model for a different product metric. I will cover data Modeling prep in a separate blog.

Before we proceed one of the book that I highly recommend to get a holistic view of modern day Data Engineering is Designing Data-Intensive Applications by Martin Kleppmann.

In order to prepare for the interview, I have come up with this idea of setting up a Facebook data model on my local SQLServer and then writing python functions to mimic user activity by randomly generating data for some fake users. Currently there are 13 tables in my data model and my main focus is to generate user activity so that I can practice writing queries to find Daily Active Users, Monthly Active Users etc.

I will keep updating this post for the type of questions I can answer from this data model. Once I am done with the whole script I will share it on Github for your perusal. I hope this is helpful for someone. So Let’s get started!!

Following are some of the questions that come to my mind for starters:

- Q1. Which user has most friends?

- Q2. How many friend requests were sent/accepted each month?

- Q3. How many friend requests were accepted the very next day ?

- Q4. What percent of the total requests were accepted very next day?

- Q5. What percentage of users don’t have any friends?

To answer above question I need a friend_requests table to store the user ids of sending and receiving users, date when the request was sent and the date when the request was accepted. The request accepted date can be NULL if the request was never accepted – Some people just send friend request to people they don’t know!! 🙂

Below is my table creation script and some inserts to generate fake data -(later my python function will take care of this, so that I have new data to play with)

create table friend_requests( userid int, friendid int, requested_on date, accepted_on date ) GO insert into friend_requests values(1,2,'01-Jul-2020','01-Jul-2020') GO insert into friend_requests values(1,3,'01-Jul-2020','02-Jul-2020') GO insert into friend_requests values(2,3,'02-Jul-2020','03-Jul-2020') GO insert into friend_requests values(1,4,'01-Aug-2020','02-Aug-2020') GO insert into friend_requests values(1,6,'02-Aug-2020','03-Aug-2020') GO insert into friend_requests values(1,8,'20-Aug-2020','21-Aug-2020') GO insert into friend_requests values(2,5,'02-Aug-2020','02-Aug-2020') GO insert into friend_requests values(2,7,'03-Aug-2020','03-Aug-2020') GO insert into friend_requests values(5,7,'20-Aug-2020','21-Aug-2020') GO insert into friend_requests values(4,6,'15-Aug-2020','15-Aug-2020') GO

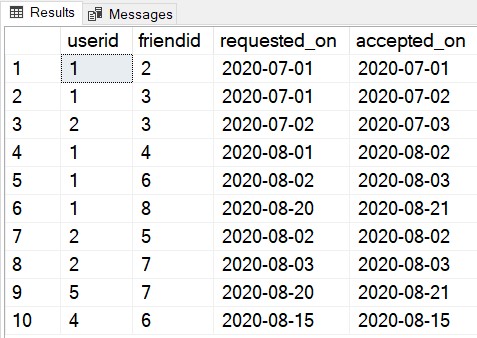

And below is what the data looks like:

Let’s tackle the first question

Q1. Which user has most friends?

Below is the query to answer this question. You have to use a union all to get all users from main userid and friendid columns and a group by to get counts for each of them.

--Who has the most friends?

SELECT TOP 1 userid,

SUM(friend_count) AS total_friends

FROM

(

SELECT userid,

COUNT(friendid) AS friend_count

FROM friend_requests

GROUP BY userid

UNION ALL

SELECT friendid AS userid,

COUNT(userid) AS friend_count

FROM friend_requests

GROUP BY friendid

) a

GROUP BY a.userid

ORDER BY 2 DESC;

And the answer is Userid 1

Next question

Q2. How many friend requests were sent/accepted each month?

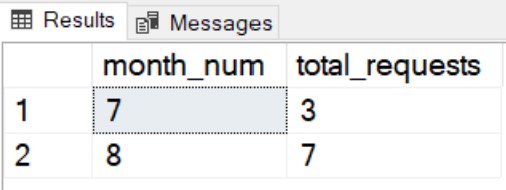

Let’s do the number of requests sent first. Below is the query to find number of friend requests sent per month. Fairly easy!

--How many friend requests were sent each month?

SELECT MONTH(requested_on) as month_num,

COUNT(*) AS total_requests

FROM friend_requests

GROUP BY MONTH(requested_on);

Below is the answer

Next up is How many friend requests were accepted each month?

Just change the date field in the above query from requested_on to accepted_on. Below is the query

--How many friend requests were accepted each month?

SELECT MONTH(accepted_on) as month_num,

COUNT(*) AS total_requests

FROM friend_requests

GROUP BY MONTH(accepted_on);

The answer is same as above.

Next up

Q3. How many friend requests were accepted the very next day ?

Given our table design, this question is fairly simple to answer

So here is the query

--How many friend requests were accepted the very next day? SELECT COUNT(*) FROM friend_requests WHERE accepted_on = DATEADD(day, 1, requested_on);

The answer is 6

Lets tackle the next question

Q4. What percent of the total requests were accepted very next day?

--What percent of the total requests were accepted very next day?

SELECT ROUND(SUM(CASE

WHEN accepted_on = DATEADD(day, 1, requested_on)

THEN 1

ELSE 0

END) * 100.0 / COUNT(*), 2) AS Perc_accepted_next_day

FROM friend_requests;

With above query you will get 60% as the answer. This query shows how to use a case statement to conditionally count a value.

Next question we can answer from this table

Q5. What percentage of users don’t have any friends?

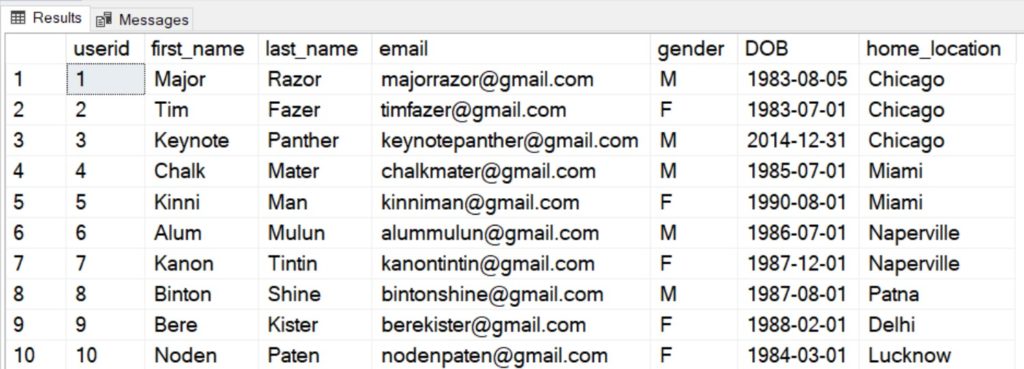

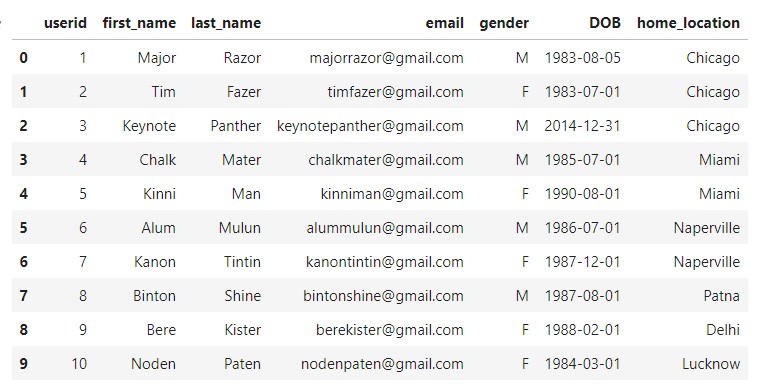

To answer this question I also need a users table which will store all the users of Facebook. Below is a screenshot of my users table along with the creation script.

CREATE TABLE users (userid INT, first_name VARCHAR(100), last_name VARCHAR(100), email VARCHAR(100), gender VARCHAR(1), DOB DATE, home_location VARCHAR(100) );

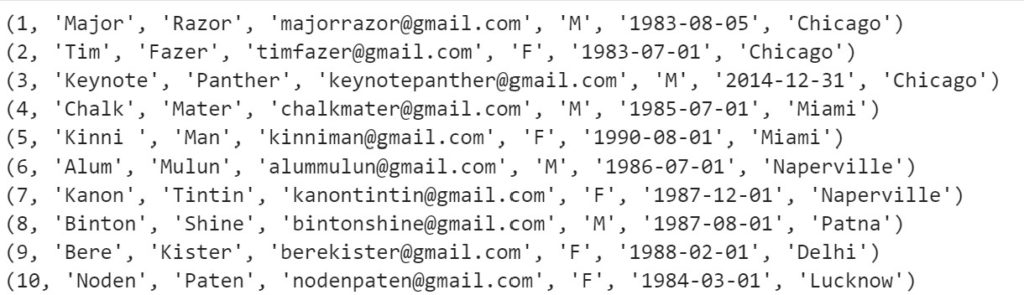

And here are the insert scripts for my initial 10 users

insert into dbo.users values (1,'Major','Razor','majorrazor@gmail.com','M','5-Aug-83','Chicago') GO insert into dbo.users values (2,'Tim','Fazer','timfazer@gmail.com','F','1-Jul-83','Chicago') GO insert into dbo.users values (3,'Keynote','Panther','keynotepanther@gmail.com','M','31-Dec-14','Chicago') GO insert into dbo.users values (4,'Chalk','Mater','chalkmater@gmail.com','M','1-Jul-85','Miami') GO insert into dbo.users values (5,'Kinni ','Man','kinniman@gmail.com','F','1-Aug-90','Miami') GO insert into dbo.users values (6,'Alum','Mulun','alummulun@gmail.com','M','1-Jul-86','Naperville') GO insert into dbo.users values (7,'Kanon','Tintin','kanontintin@gmail.com','F','1-Dec-87','Naperville') GO insert into dbo.users values (8,'Binton','Shine','bintonshine@gmail.com','M','1-Aug-87','Patna') GO insert into dbo.users values (9,'Bere','Kister','berekister@gmail.com','F','1-Feb-88','Delhi') GO insert into dbo.users values (10,'Noden','Paten','nodenpaten@gmail.com','F','1-Mar-84','Lucknow') GO

Below is how the data looks like in the table

Now to the query, Here we need to left join friend_requests table to users table twice, once on userid field and then on friendid field. Then we can use Case statement magic for selective counting to get our percentage of users with no friends. So here is the query

--What perc of users don't have any friends?

SELECT ROUND(COUNT(DISTINCT CASE

WHEN fr.userid IS NULL

AND fr2.userid IS NULL

THEN u.userid

ELSE NULL

END) * 100.0 / COUNT(DISTINCT u.userid), 2) AS perc_users_no_fr

FROM users u

LEFT JOIN friend_requests fr ON u.userid = fr.userid

LEFT JOIN friend_requests fr2 ON u.userid = fr2.friendid;

This will return 20% as user 9 and 10 don’t have an entry in the friend_requests table. Poor lonely people!. Don’t worry we will soon give them friends when my automated script is in place

Now moving on to the topic of this post. Creating an automated python script to mimic user activity, so that I can use the data to calculate various product metrics.

Python Script for User activity mimicry

Let’s now open a python editor. Jupyter lab is my choice for experimentation. VSCode when I am ready and confident to make it as an automatic script.

First thing my python script needs is to connect to the SQLServer database.

Below is the piece of code which will connect to your local sql server. Just replace the server name and database name in this script. Also I had to install pyodbc library using pip install pyodbc.

import pyodbc

conn = pyodbc.connect('Driver={SQL Server};'

'Server=<server_name>;'

'Database=<database_name>;'

'Trusted_Connection=yes;')

cursor = conn.cursor()

cursor.execute('SELECT * FROM dbo.users')

for row in cursor:

print(row)

And here is the output of this script. Test results from dbo.users table

Next step, let’s create the user_activity_log table on the database which will be populated using the python script

CREATE TABLE user_activity_log (sessionid INT, userid INT, deviceid INT, browserid INT, postid INT, stepid INT, ip_location VARCHAR(100), activity_time DATETIME, activty_type VARCHAR(100), activity VARCHAR(100) ); GO

This is the main log table which we will use for our further analysis and metric calculations. I want to make it as granular as possible. The most basic functionality that Facebook provides is to read,create and interact with a post. In the first step let’s work on creating posts from various users.

Let’s mimic the process of users uploading pictures to Facebook. In order to keep it simple let’s assume that uploading is a 3 step process where you tap(1) on photos button which will popup your phone’s camera roll, then you select(2) some photos and hit upload(3).

First let think about what each field will contain and how to generate fake data for each.

SessionID – This will be a running sequence but will remain same for the entire duration a user is performing actions related to a functionality.

UserID – Get a random userid from the dbo.users table

DeviceID – Get a random deviceid from the dbo.devices table

CREATE TABLE devices (deviceid INT, device_name VARCHAR(100), operating_system VARCHAR(100) ); GO

BrowserID – Get a random browserid from the dbo.browsers table

CREATE TABLE browsers (browserid INT, browser_name VARCHAR(100), browser_version VARCHAR(100) ); GO

PostID – This will depend on the nature of user activity. For the photo upload process it will be generated when user starts performing the photo upload process and will remain same for all the steps involved. Also an entry will be made to posts table when the final action for photo upload is taken by the user.

CREATE TABLE posts (postid INT, posted_by_user INT, posted_on DATE ); GO

StepID – Again for our 3 step photo upload process it will be either 1,2 or 3

ip_location – This is the location inferred by Facebook from your IP address. For simplicity lets decide on a list of cities and we will select a random city from it.

activity_time – The timestamp of the user action

activity_type – For photo upload process it will be photo_upload

activity – For photo upload process it will be either Tap Photo button, Select Photos, Upload photos

What metrics can this log table answer?

- Q1. How many users post photos to Facebook each day?

- Q2. How much time on average it takes users to upload photos to Facebook?

- Q3. How much time users spend on each step on average?

- Q4. How many users completed each step?

- Q5. What are the top 2 devices used by users to post photos?

- Q6. Which browser has the fastest completion time for the photo upload process?

- Q7. At which step do most users give-up posting photos to Facebook?

- Q8. What are the top 3 location from where most users post photos to Facebook?

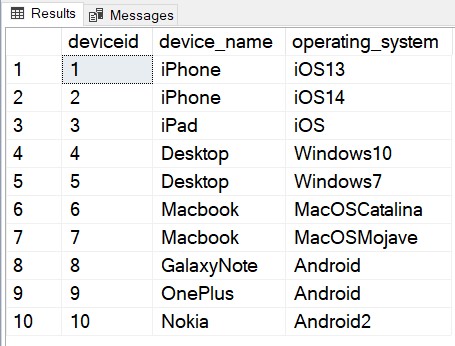

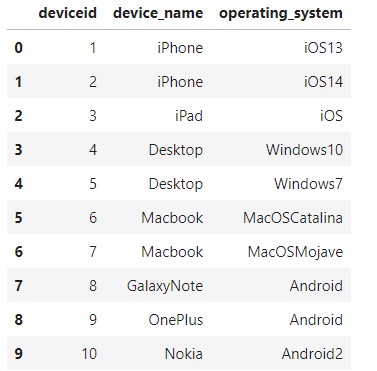

So let’s get started writing our script. Let’s start simple by inserting data to devices and browser tables using python script.

Insert into devices table

cursor.execute("Insert into dbo.devices values(1,'iPhone','iOS13')")

cursor.execute("Insert into dbo.devices values(2,'iPhone','iOS14')")

cursor.execute("Insert into dbo.devices values(3,'iPad','iOS')")

cursor.execute("Insert into dbo.devices values(4,'Desktop','Windows10')")

cursor.execute("Insert into dbo.devices values(5,'Desktop','Windows7')")

cursor.execute("Insert into dbo.devices values(6,'Macbook','MacOSCatalina')")

cursor.execute("Insert into dbo.devices values(7,'Macbook','MacOSMojave')")

cursor.execute("Insert into dbo.devices values(8,'GalaxyNote','Android')")

cursor.execute("Insert into dbo.devices values(9,'OnePlus','Android')")

cursor.execute("Insert into dbo.devices values(10,'Nokia','Android2')")

cursor.commit()

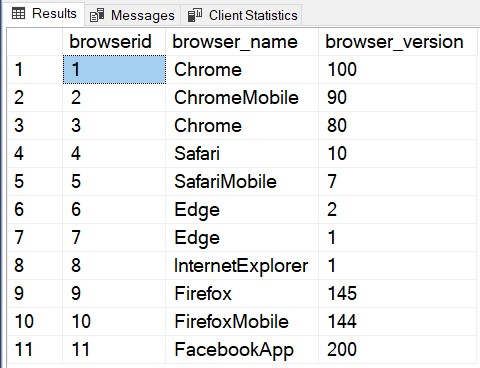

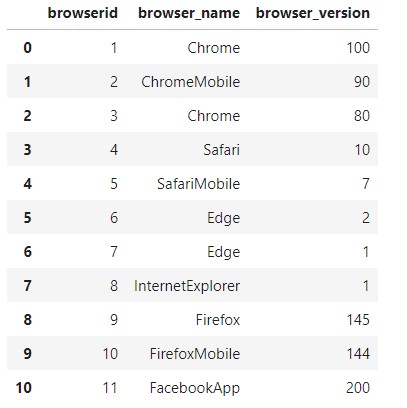

Insert into browsers table

cursor.execute("Insert into dbo.browsers values(1,'Chrome','100')")

cursor.execute("Insert into dbo.browsers values(2,'ChromeMobile','90')")

cursor.execute("Insert into dbo.browsers values(3,'Chrome','80')")

cursor.execute("Insert into dbo.browsers values(4,'Safari','10')")

cursor.execute("Insert into dbo.browsers values(5,'SafariMobile','7')")

cursor.execute("Insert into dbo.browsers values(6,'Edge','2')")

cursor.execute("Insert into dbo.browsers values(7,'Edge','1')")

cursor.execute("Insert into dbo.browsers values(8,'InternetExplorer','1')")

cursor.execute("Insert into dbo.browsers values(9,'Firefox','145')")

cursor.execute("Insert into dbo.browsers values(10,'FirefoxMobile','144')")

cursor.execute("Insert into dbo.browsers values(11,'FacebookApp','200')")

cursor.commit()

Now I am creating all the data structures which I will need in the function which inserts data into the user_activity_log table. I am using pandas dataframes to fetch and store data from sqlserver tables, out of which I will pick random values to create insert records for user_activity_log table.

So here are pandas commands to get all SQL server table data into pandas dataframes

Get Users data

import pandas as pd

users_query = """

SELECT *

FROM dbo.users

"""

df_users = pd.read_sql(users_query, conn)

df_users

Get Devices data

devices_query = """

SELECT *

FROM dbo.devices

"""

df_devices = pd.read_sql(devices_query, conn)

df_devices

Get Browsers data

browsers_query = """

SELECT *

FROM dbo.browsers

"""

df_browsers = pd.read_sql(browsers_query, conn)

df_browsers

I am feeling a bit lazy, for location_list, steps and activity types I will use python lists and dictionaries to store the data. Also I may need Sessions table to get the latest session id but I will decide that later.

location_list = ['Chicago','New York','San Francisco','Naperville','Miami','Kenosha','Toronto','New Delhi','Bangalore','Singapore']

step_dict = {1:'Tap photo button',2:'Select photos',3:'Upload'}

activity_types = ['photo_upload']

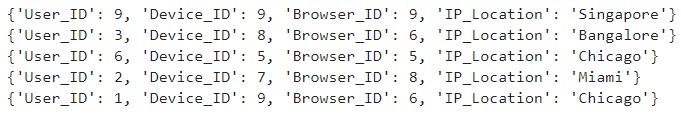

Now that all data structures are setup, I need to create a function to retrieve a random value for these variables in order to mimic random user activity

import random

def get_random_user_point():

userid_rnd = random.choice(df_users['userid'])

deviceid_rnd = random.choice(df_devices['deviceid'])

browserid_rnd = random.choice(df_browsers['browserid'])

ip_location_rnd = random.choice(location_list)

user_point_dict = {'User_ID':userid_rnd,

'Device_ID':deviceid_rnd,

'Browser_ID':browserid_rnd,

'IP_Location':ip_location_rnd}

print('User ID: ',userid_rnd,' Device ID: ',deviceid_rnd,' Browser ID: ',browserid_rnd,' IP Location: ',ip_location_rnd)

return user_point_dict

This is what is returned if you run this function 5 times:

print(get_random_user_point()) print(get_random_user_point()) print(get_random_user_point()) print(get_random_user_point()) print(get_random_user_point())

Now that basic commands are clear, I will create two functions one to generate insert statements for user_activity_log table based on the dictionary passed and second function to mimic the upload photos process which goes through 3 step photo upload process sequentially with artificial delay between steps.

I forgot to mention above, there are no actual photos involved here. I am just simulating the photo upload process by random users.

Below are the two functions:

#Function to create insert statements based on attribute dictionary

def create_ua_insert_stmt(attrib_dict):

ua_insert_cmd_start = "Insert into dbo.user_activity_log values("

session_id = attrib_dict['session_id']

user_id = attrib_dict['user_id']

device_id = attrib_dict['device_id']

browser_id = attrib_dict['browser_id']

post_id = attrib_dict['post_id']

step_id = attrib_dict['step_id']

ip_location = attrib_dict['ip_location']

activity_time = datetime.strftime(datetime.now(), "%m/%d/%Y %H:%M:%S")

activity_time = attrib_dict['activity_time']

activity_type = attrib_dict['activity_type']

activity = attrib_dict['activity']

ua_insert_cmd = (ua_insert_cmd_start+ str(session_id) + ","

+ str(user_id) + ","

+ str(device_id) + ","

+ str(browser_id) + ","

+ str(post_id) + ","

+ str(step_id) + ","

+ "'"+ip_location+"',"

+ "'" + activity_time + "',"

+ "'"+activity_type+"',"

+ "'"+activity+"')")

return(ua_insert_cmd)

from datetime import datetime, date

import time

#Function to mimic the upload photo process

def mimic_upload_photo(session_id):

activity_type ='photo_upload'

step_dict = {1:'Tap photo button',2:'Select photos',3:'Upload'}

time_delay = [1,2,3,4,5,10,15]

user_point = get_random_user_point()

for step in sorted(step_dict.keys()):

attrib_dict = {}

attrib_dict['session_id'] = session_id

attrib_dict['user_id'] = user_point['User_ID']

attrib_dict['device_id'] = user_point['Device_ID']

attrib_dict['browser_id'] = user_point['Browser_ID']

attrib_dict['post_id'] = 1

attrib_dict['step_id'] = step

attrib_dict['ip_location'] = user_point['IP_Location']

if step > 1:

delay = random.choice(time_delay)

print('Delaying for: {}'.format(delay))

time.sleep(delay) #Artificial Delay to mimic the time it takes to complete as step

step_time = datetime.now()

step_time = datetime.strftime(step_time, "%m/%d/%Y %H:%M:%S")

print(step_time)

attrib_dict['activity_time'] = step_time

attrib_dict['activity_type'] = activity_type

attrib_dict['activity'] = step_dict[step]

insert_stmnt = create_ua_insert_stmt(attrib_dict)

print(insert_stmnt)

cursor.execute(insert_stmnt)

conn.commit()

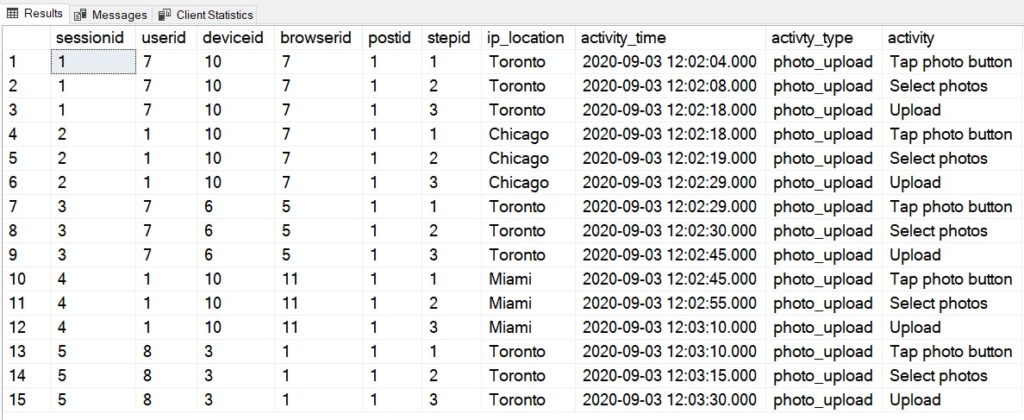

Below is the run log of the upload photos process when I ran it for 5 sessions

for sesion_id in range(1,6):

mimic_upload_photo(sesion_id)

09/03/2020 12:02:04 Insert into dbo.user_activity_log values(1,7,10,7,1,1,'Toronto','09/03/2020 12:02:04','photo_upload','Tap photo button') Delaying for: 4 09/03/2020 12:02:08 Insert into dbo.user_activity_log values(1,7,10,7,1,2,'Toronto','09/03/2020 12:02:08','photo_upload','Select photos') Delaying for: 10 09/03/2020 12:02:18 Insert into dbo.user_activity_log values(1,7,10,7,1,3,'Toronto','09/03/2020 12:02:18','photo_upload','Upload') 09/03/2020 12:02:18 Insert into dbo.user_activity_log values(2,1,10,7,1,1,'Chicago','09/03/2020 12:02:18','photo_upload','Tap photo button') Delaying for: 1 09/03/2020 12:02:19 Insert into dbo.user_activity_log values(2,1,10,7,1,2,'Chicago','09/03/2020 12:02:19','photo_upload','Select photos') Delaying for: 10 09/03/2020 12:02:29 Insert into dbo.user_activity_log values(2,1,10,7,1,3,'Chicago','09/03/2020 12:02:29','photo_upload','Upload') 09/03/2020 12:02:29 Insert into dbo.user_activity_log values(3,7,6,5,1,1,'Toronto','09/03/2020 12:02:29','photo_upload','Tap photo button') Delaying for: 1 09/03/2020 12:02:30 Insert into dbo.user_activity_log values(3,7,6,5,1,2,'Toronto','09/03/2020 12:02:30','photo_upload','Select photos') Delaying for: 15 09/03/2020 12:02:45 Insert into dbo.user_activity_log values(3,7,6,5,1,3,'Toronto','09/03/2020 12:02:45','photo_upload','Upload') 09/03/2020 12:02:45 Insert into dbo.user_activity_log values(4,1,10,11,1,1,'Miami','09/03/2020 12:02:45','photo_upload','Tap photo button') Delaying for: 10 09/03/2020 12:02:55 Insert into dbo.user_activity_log values(4,1,10,11,1,2,'Miami','09/03/2020 12:02:55','photo_upload','Select photos') Delaying for: 15 09/03/2020 12:03:10 Insert into dbo.user_activity_log values(4,1,10,11,1,3,'Miami','09/03/2020 12:03:10','photo_upload','Upload') 09/03/2020 12:03:10 Insert into dbo.user_activity_log values(5,8,3,1,1,1,'Toronto','09/03/2020 12:03:10','photo_upload','Tap photo button') Delaying for: 5 09/03/2020 12:03:15 Insert into dbo.user_activity_log values(5,8,3,1,1,2,'Toronto','09/03/2020 12:03:15','photo_upload','Select photos') Delaying for: 15 09/03/2020 12:03:30 Insert into dbo.user_activity_log values(5,8,3,1,1,3,'Toronto','09/03/2020 12:03:30','photo_upload','Upload')

And here is the data in the sql server table user_activity_log

I am happy with what I have achieved until now. I will need to schedule this script to run continuously. Also I will need to modify it to generate some historical data and may be parallelize it (but that I can do later after the interview maybe:) ).

I also forgot to simulate incomplete photo upload process which I will work on later.

I am now running it for 100 sessions and then we will answer the metrics question I listed above. Will take a while!!!

Okay, I managed to add code which will mimic the incomplete photo upload process for random sessions. Below is the same function mimic_upload_photo with lets break code towards the end. If the step id is less than 3 and random choice is less than 3, the session will be abruptly terminated.

from datetime import datetime, date

import time

#Function to mimic the upload photo process

def mimic_upload_photo(session_id,random_break=False):

activity_type ='photo_upload'

step_dict = {1:'Tap photo button',2:'Select photos',3:'Upload'}

time_delay = [1,2,3,4,5,10,15]

user_point = get_random_user_point()

for step in sorted(step_dict.keys()):

attrib_dict = {}

attrib_dict['session_id'] = session_id

attrib_dict['user_id'] = user_point['User_ID']

attrib_dict['device_id'] = user_point['Device_ID']

attrib_dict['browser_id'] = user_point['Browser_ID']

attrib_dict['post_id'] = 1

attrib_dict['step_id'] = step

attrib_dict['ip_location'] = user_point['IP_Location']

if step > 1:

delay = random.choice(time_delay)

print('Delaying for: {}'.format(delay))

time.sleep(delay) #Artificial Delay to mimic the time it takes to complete as step

step_time = datetime.now()

step_time = datetime.strftime(step_time, "%m/%d/%Y %H:%M:%S")

#print(step_time)

attrib_dict['activity_time'] = step_time

attrib_dict['activity_type'] = activity_type

attrib_dict['activity'] = step_dict[step]

insert_stmnt = create_ua_insert_stmt(attrib_dict)

#print(insert_stmnt)

print(session_id)

cursor.execute(insert_stmnt)

conn.commit()

if step < 3 and random_break:

lets_break = random.choice(range(1,10))

if lets_break <3:

print('Incomplete photo upload process')

break

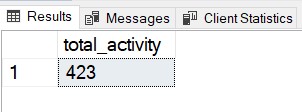

Below I am running it for 50 more sessions with random_break = True.

for sesion_id in range(101,151):

mimic_upload_photo(sesion_id,random_break=True)

Here is the log. It got abruptly ended almost 18 times out of 50.

101 Delaying for: 3 101 Delaying for: 2 101 102 Delaying for: 4 102 Delaying for: 10 102 103 Delaying for: 10 103 Delaying for: 3 103 104 Delaying for: 2 104 Delaying for: 10 104 105 Delaying for: 1 105 Delaying for: 15 105 106 Delaying for: 1 106 Delaying for: 1 106 107 Incomplete photo upload process 108 Delaying for: 3 108 Delaying for: 3 108 109 Delaying for: 5 109 Incomplete photo upload process 110 Delaying for: 3 110 Delaying for: 3 110 111 Delaying for: 3 111 Delaying for: 4 111 112 Delaying for: 10 112 Delaying for: 5 112 113 Delaying for: 4 113 Delaying for: 15 113 114 Delaying for: 10 114 Delaying for: 2 114 115 Delaying for: 3 115 Delaying for: 4 115 116 Delaying for: 2 116 Delaying for: 4 116 117 Incomplete photo upload process 118 Delaying for: 3 118 Delaying for: 1 118 119 Delaying for: 2 119 Delaying for: 4 119 120 Delaying for: 1 120 Delaying for: 10 120 121 Incomplete photo upload process 122 Incomplete photo upload process 123 Delaying for: 15 123 Incomplete photo upload process 124 Delaying for: 1 124 Delaying for: 4 124 125 Delaying for: 2 125 Delaying for: 10 125 126 Delaying for: 10 126 Incomplete photo upload process 127 Delaying for: 10 127 Delaying for: 4 127 128 Delaying for: 10 128 Incomplete photo upload process 129 Delaying for: 5 129 Delaying for: 15 129 130 Delaying for: 5 130 Delaying for: 5 130 131 Delaying for: 4 131 Delaying for: 10 131 132 Incomplete photo upload process 133 Delaying for: 1 133 Incomplete photo upload process 134 Delaying for: 2 134 Delaying for: 15 134 135 Delaying for: 4 135 Delaying for: 3 135 136 Delaying for: 1 136 Incomplete photo upload process 137 Delaying for: 1 137 Delaying for: 15 137 138 Incomplete photo upload process 139 Delaying for: 2 139 Delaying for: 3 139 140 Delaying for: 5 140 Delaying for: 4 140 141 Delaying for: 4 141 Delaying for: 1 141 142 Delaying for: 15 142 Delaying for: 4 142 143 Delaying for: 15 143 Incomplete photo upload process 144 Incomplete photo upload process 145 Delaying for: 10 145 Incomplete photo upload process 146 Delaying for: 1 146 Delaying for: 15 146 147 Delaying for: 15 147 Incomplete photo upload process 148 Incomplete photo upload process 149 Delaying for: 1 149 Delaying for: 2 149 150 Incomplete photo upload process

And here is the count in my user_activity_log table

So now I have 423 records to play with and total of 150 random user sessions with some of them incomplete. I think I have good enough data now to answer the metric questions.

I will end this post here because it is getting too long. I will answer the metrics questions in the next post. Hope you enjoyed this journey with me. I loved it!!

Here is a link to the second post

Hi Raghav,

Thanks for sharing this .. its really helpful.

in “Q5. What percentage of users don’t have any friends?”, I think we need to account for the people who have sent friend request but didn’t got accepted and they don’t have any friends will not be taken care.

What do you think?